Earlier this month I tweeted "When people write about AI like it's this brand new thing, should I be amused, feel old, or both?" The tweet linked to a recent Harvard Business Review article titled "Data Scientists Don't Scale," which describes what Artificial Intelligence is currently doing. Those things just happen to be what the article author's automated prose-generation company does.

The article provided absolutely no historical context for this phrase, which has thrilled, annoyed, and fascinated people since John McCarthy coined it in 1955. (For a little historical context, this was two years after Dwight Eisenhower succeeded Harry Truman as President of the United States. Three years after coining the term, McCarthy invented Lisp, a programming language that is still used today and that provided the basis for other popular languages such as Scheme and the currently very hot Clojure.) I recently came across a link to the seminal 1960 paper "Steps Toward Artificial Intelligence" by AI pioneer Marvin Minsky, who was there at the beginning in 1955, so I read it on a long plane ride. It's fascinating how relevant much of it still is today, especially when you take into account the limited computing power available 55 years ago.

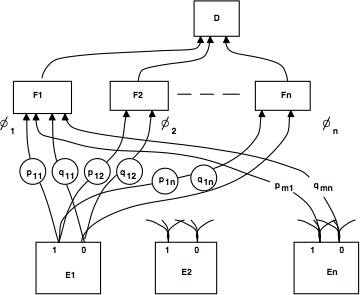

After enumerating the five basic categories of "making computers solve really difficult problems" (search, pattern recognition, learning, planning, and induction), the paper mentions several algorithms that are still considered basic tools in Machine Learning toolboxes: hill climbing, naive Bayesian classification, perceptrons, reinforcement learning, and neural nets. Minsky notes that one part of Bayesian classification "can be made by a simple network device," which he illustrates with this diagram:

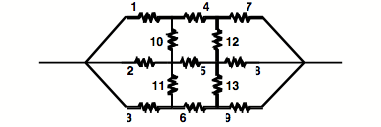
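One way to see why a simple network could handle part of this job: in naive Bayesian classification, taking logarithms turns the product of a class's prior probability and its per-property likelihoods into a sum, and adding up weights on whichever inputs are active is exactly the kind of thing a network of weighted connections can do. Here is a minimal Python sketch of that idea, assuming binary properties that are conditionally independent given the class. The class names, property names, and probabilities are all hypothetical, made up for illustration; they are not from Minsky's paper, and this is not a reconstruction of his diagram.

```python
import math

# Hypothetical example: two character classes and three binary properties.
# All names and probabilities are made up for illustration.
PRIORS = {"A": 0.6, "B": 0.4}

# P(property is present | class), assuming the properties are
# conditionally independent given the class (the "naive" assumption).
LIKELIHOODS = {
    "A": {"has_crossbar": 0.9, "has_loop": 0.2, "is_symmetric": 0.8},
    "B": {"has_crossbar": 0.1, "has_loop": 0.9, "is_symmetric": 0.3},
}


def class_scores(observed):
    """Return a log-probability score for each class.

    Each score is log P(class) plus, for every property, the log of
    P(present | class) if the property was observed, or
    P(absent | class) = 1 - P(present | class) if it was not.
    Taking logs turns the naive Bayes product into a sum of weights.
    """
    scores = {}
    for cls, prior in PRIORS.items():
        score = math.log(prior)
        for prop, p_present in LIKELIHOODS[cls].items():
            p = p_present if prop in observed else 1.0 - p_present
            score += math.log(p)
        scores[cls] = score
    return scores


def classify(observed):
    """Pick the class with the highest summed score."""
    scores = class_scores(observed)
    return max(scores, key=scores.get)


if __name__ == "__main__":
    print(classify({"has_crossbar", "is_symmetric"}))  # → A
    print(classify({"has_loop"}))  # → B
```

Working with sums of log-probabilities rather than products of raw probabilities also avoids multiplying many small numbers together, which is why software implementations usually work in log space as well.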

It's wild to consider that the software possibilities were so limited at the time that implementing some of these ideas was easier by just building specialized hardware. Minsky also describes the implementation of a certain math game by a network of resistors designed by Claude Shannon (whom I was happy to hear mentioned in the season 1 finale of Silicon Valley).

Minsky's paper also references the work of B.F. Skinner, of Skinner box fame, when describing reinforcement learning, and it cites Noam Chomsky when describing inductive learning. I mention these two together because this past week I also read an interview that took place just three years ago, titled "Noam Chomsky on Where Artificial Intelligence Went Wrong." Describing those early days of AI research, the interview's introduction explains:

> Some of McCarthy's colleagues in neighboring departments, however, were more interested in how intelligence is implemented in humans (and other animals) first. Noam Chomsky and others worked on what became cognitive science, a field aimed at uncovering the mental representations and rules that underlie our perceptual and cognitive abilities. Chomsky and his colleagues had to overthrow the then-dominant paradigm of behaviorism, championed by Harvard psychologist B.F. Skinner, where animal behavior was reduced to a simple set of associations between an action and its subsequent reward or punishment. The undoing of Skinner's grip on psychology is commonly marked by Chomsky's 1959 critical review of Skinner's book Verbal Behavior, a book in which Skinner attempted to explain linguistic ability using behaviorist principles.

The introduction goes on to describe a 2011 symposium at MIT on "Brains, Minds and Machines," which "was meant to inspire multidisciplinary enthusiasm for the revival of the scientific question from which the field of artificial intelligence originated: how does intelligence work?"

> Noam Chomsky, speaking in the symposium, wasn't so enthused. Chomsky critiqued the field of AI for adopting an approach reminiscent of behaviorism, except in more modern, computationally sophisticated form. Chomsky argued that the field's heavy use of statistical techniques to pick regularities in masses of data is unlikely to yield the explanatory insight that science ought to offer. For Chomsky, the "new AI" — focused on using statistical learning techniques to better mine and predict data — is unlikely to yield general principles about the nature of intelligent beings or about cognition.

The whole interview is worth reading. I'm not saying that I completely agree with Chomsky or completely disagree with him (as Google's Peter Norvig does in an essay that has the excellent URL http://norvig.com/chomsky.html but gets a little ad hominem when he starts comparing Chomsky to Bill O'Reilly), only that Minsky's 1960 paper and Chomsky's 2012 interview, taken together, provide a good perspective on where AI came from and the path it took to the roles it plays today.

I'll close with this nice quote from a discussion in Minsky's paper of what exactly "intelligence" is and whether machines are capable of it:

> Programmers, too, know that there is never any "heart" in a program. There are high-level routines in each program, but all they do is dictate that "if such-and-such, then transfer to such-and-such a subroutine." And when we look at the low-level subroutines, which "actually do the work," we find senseless loops and sequences of trivial operations, merely carrying out the dictates of their superiors. The intelligence in such a system seems to be as intangible as becomes the meaning of a single common word when it is thoughtfully pronounced over and over again.